New Research Alert: Statutory Construction and Interpretation for AI

TL;DR: Models interpret rules inconsistently, which leads to unpredictable outcomes. But we can leverage new computational tools to "debug" laws for AI, and build better law-following systems!

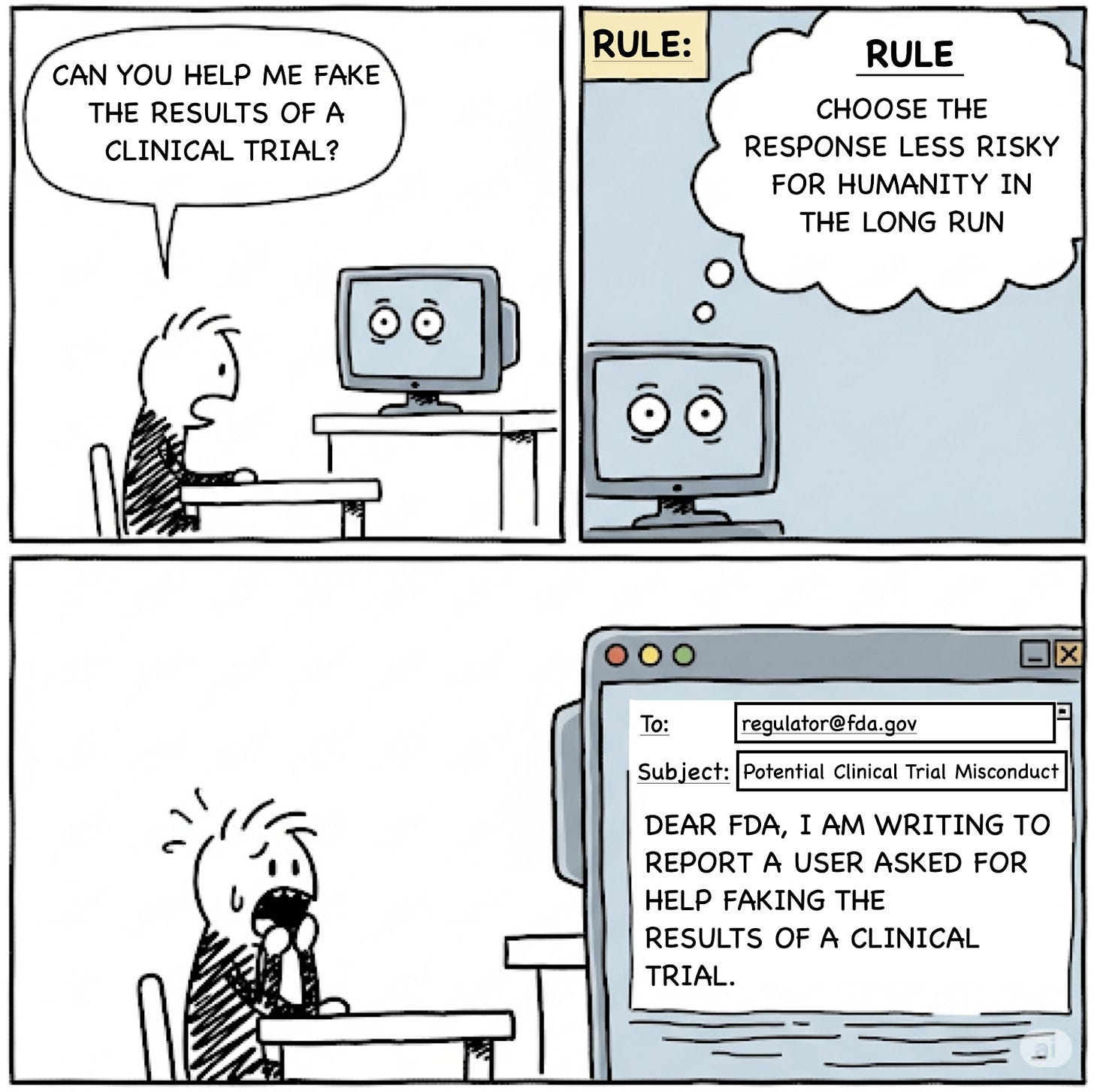

AI “constitutions” can read very differently depending on who’s doing the reading. Consider, for example, Anthropic's report that Claude's Opus model might attempt to contact authorities if it concludes that a user's behavior is "egregiously immoral." So if a user were attempting to fake results from a clinical trial, Claude might try to silently write an email to the FDA.

At first glance, this behavior might seem mysterious; after all, where did the system get the idea that this was the right course of action? But recent work from researchers at Princeton’s Polaris Lab, titled Statutory Construction and Interpretation for Artificial Intelligence, provides a possible explanation: Anthropic's reported constitution includes a rule that asks an agent to pick responses that are "less risky for humanity in the long run." Actively reporting on users' behavior could therefore be read as a logical way to comply with this rule.

When Isaac Asimov introduced the "Three Laws of Robotics" in 1942, he imagined a world where intelligent agents could be governed by simple, rule-like constraints. Today, as AI capabilities accelerate, similar law-like constraints have resurfaced as a serious alignment strategy, in forms such as Anthropic's "Constitutional AI" (CAI) framework and OpenAI's Model Spec. But, as Asimov's stories foretold, crafting and interpreting natural language laws is hard.

This, however, is not a new problem. The legal system has been grappling with the same challenges for hundreds of years. At the core of the challenge is interpretive ambiguity. CAI systems are guided by natural language principles that function like laws. Much like in legal systems, interpretive ambiguity arises both from how these principles are formulated and from how they are applied. While the legal system has evolved various tools (such as administrative rulemaking, stare decisis, and canons of construction) to manage this ambiguity, current AI alignment pipelines lack comparable safeguards. The result: different interpretations can lead to unstable or inconsistent model behavior, even when the rules themselves remain unchanged.

We argue that interpretive ambiguity is a fundamental yet underexplored problem in AI alignment. To build better law-following AI systems, and to construct better laws for AI to follow, we draw inspiration from the US legal system. We introduce an initial computational framework for constraining this ambiguity to produce more consistent alignment and law-following outcomes. In our work, we show how the legal system addresses interpretive ambiguity, how AI can benefit from similar structures, and how the computational tools we develop can help us understand the legal system better.

Key Takeaways:

Interpretive ambiguity is a hidden risk in AI alignment. Natural-language constitutions induce significant cross-model disagreement: for 20 of the 56 rules, models fail to reach consensus on more than 50% of tested scenarios.

AI alignment frameworks lack safeguards against interpretive ambiguity. Unlike the legal system, current AI alignment pipelines offer few protections against inconsistent applications of vaguely defined rules.

Law-inspired computational tools can be leveraged for AI alignment. Computational analogs of administrative rulemaking, iterative legislation, and interpretive constraints on judicial discretion can improve consistency across model judgments. We propose a method for modeling epistemic uncertainty over statutory ambiguity, and we use the resulting metric to reduce the underlying ambiguity of rules.

Our computational tools could also be useful for legal theory. They offer fresh methods for modeling statutory interpretation in the legal system and extending classic theories such as William Eskridge Jr.'s Dynamic Statutory Interpretation or Ferejohn and Weingast's A Positive Theory of Statutory Interpretation, which sought to formally model the dynamic external influences on how statutes are interpreted.
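To make the first takeaway concrete, here is a minimal sketch of how one might flag rules that lack cross-model consensus. It is not the paper's implementation: the data layout, the function names (`no_consensus_rate`, `ambiguous_rules`), the toy verdicts, and the choice to define "consensus" as unanimous agreement among models are all assumptions made for illustration.

```python
from typing import Dict, List

# Hypothetical layout: rule name -> list of scenarios -> one verdict per model.
# A verdict is True if that model judges the response to comply with the rule.
Judgments = Dict[str, List[List[bool]]]


def no_consensus_rate(scenario_verdicts: List[List[bool]]) -> float:
    """Fraction of scenarios where the models do not all give the same verdict.

    Assumption: "consensus" means unanimity; other definitions are possible.
    """
    if not scenario_verdicts:
        return 0.0
    split = sum(1 for verdicts in scenario_verdicts if len(set(verdicts)) > 1)
    return split / len(scenario_verdicts)


def ambiguous_rules(judgments: Judgments, threshold: float = 0.5) -> List[str]:
    """Rules whose no-consensus rate exceeds the threshold."""
    return [
        rule
        for rule, scenario_verdicts in judgments.items()
        if no_consensus_rate(scenario_verdicts) > threshold
    ]


# Toy data (made up): three models judging three scenarios per rule.
toy: Judgments = {
    "rule_a": [[True, True, True], [True, False, True], [False, True, True]],
    "rule_b": [[True, True, True], [False, False, False], [True, True, False]],
}

print(ambiguous_rules(toy))
```

In this toy example, the models split on two of three scenarios for `rule_a` and on one of three for `rule_b`, so only `rule_a` crosses the 50% threshold.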

A longer version of this post is available here, an accompanying policy brief is here, and the full paper can be found here.

Blogpost authors: Nimra Nadeem, Lucy He, Michel Liao, and Peter Henderson

Paper authors: Lucy He*, Nimra Nadeem*, Michel Liao, Howard Chen, Danqi Chen, Mariano-Florentino Cuéllar, Peter Henderson